Chroma AR: a WebAR experience for The Met

Chroma AR is the WebAR component for The Met’s recent Chroma exhibition, which tells the story of how ancient sculptures originally looked and how a team of scientists, artists, and technologists figured that out.

The app was built using 8th Wall’s WebAR JavaScript engine paired with Three.js, vanilla JavaScript, HTML, and CSS.

App experience

The app features three modes – Explore, Learn, and Play. Each mode uses a variety of special effects that leverage both the 8th Wall WebAR engine and custom Three.js code.

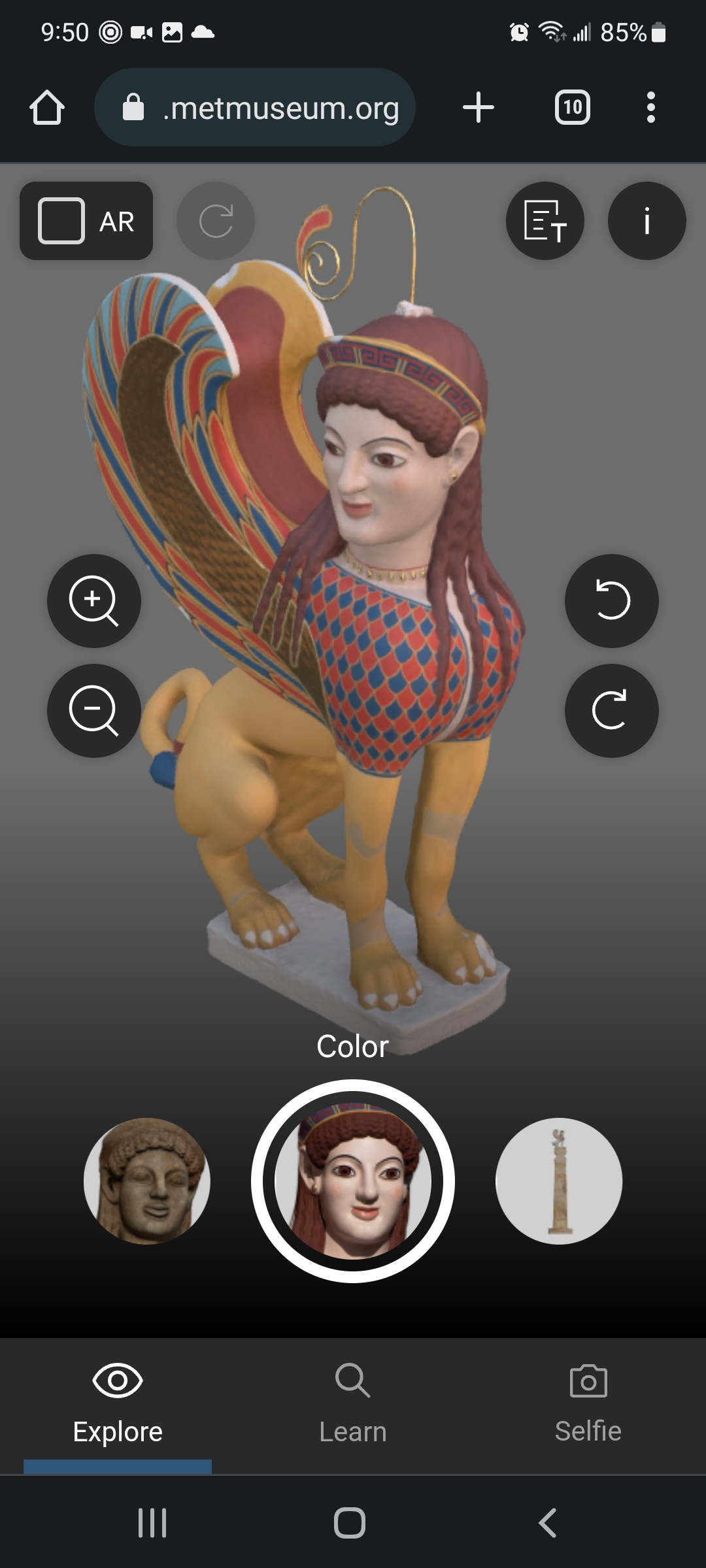

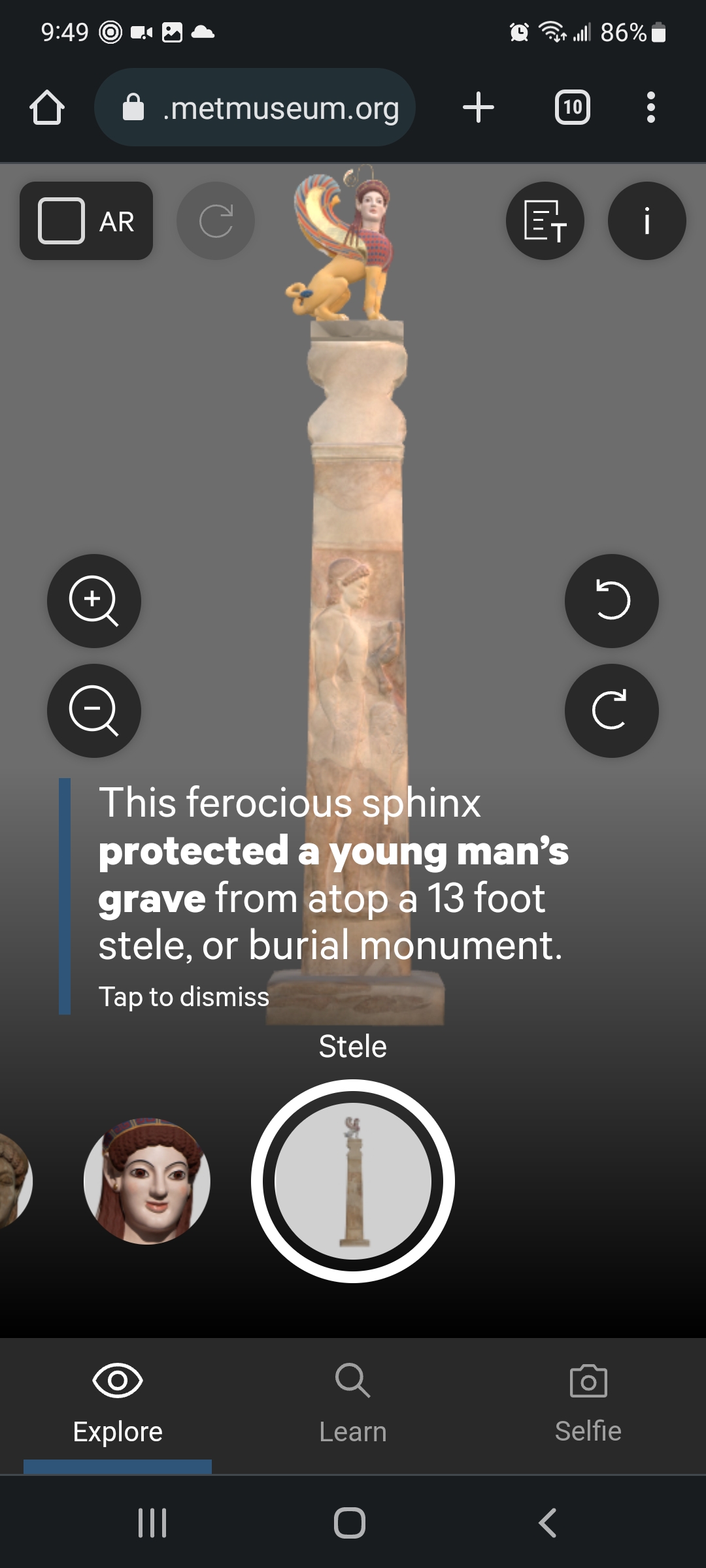

Explore mode

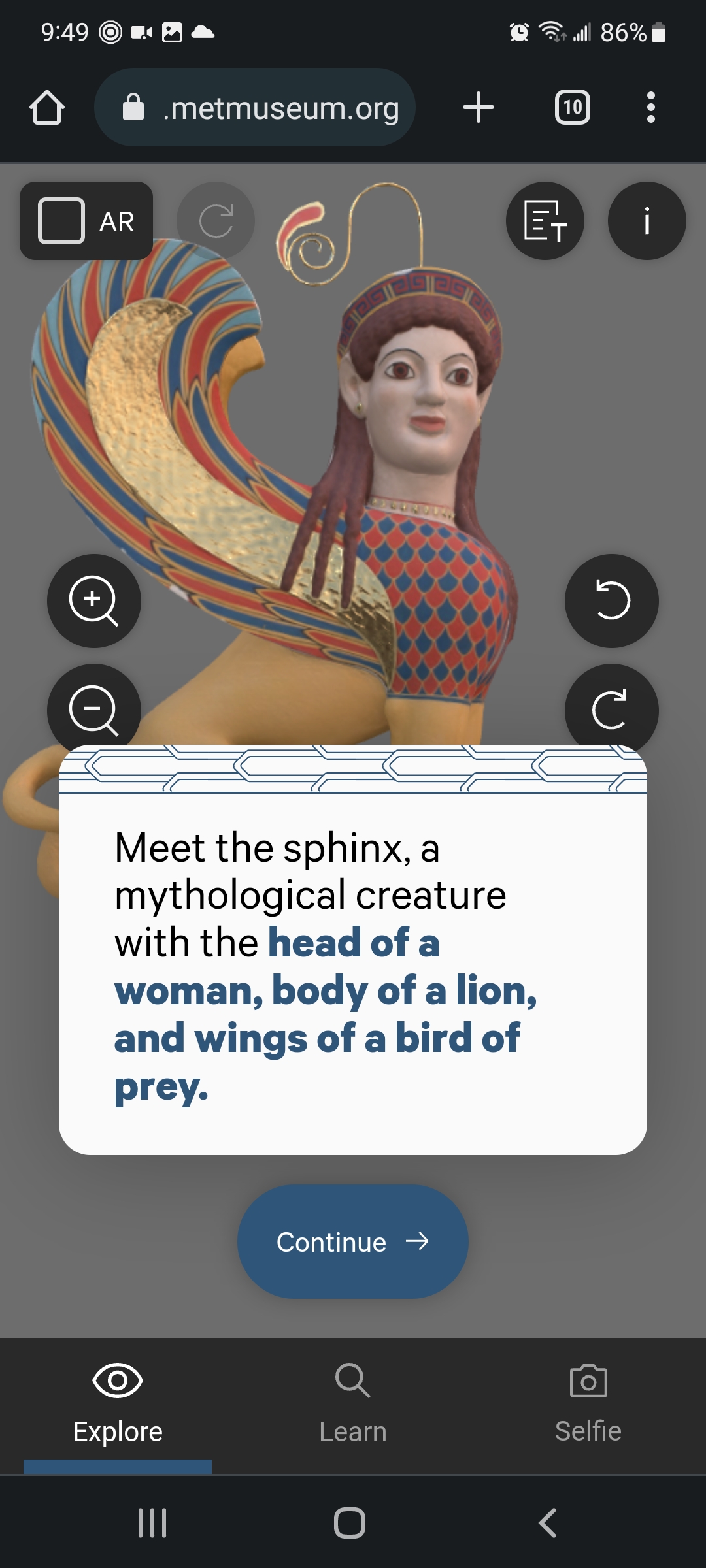

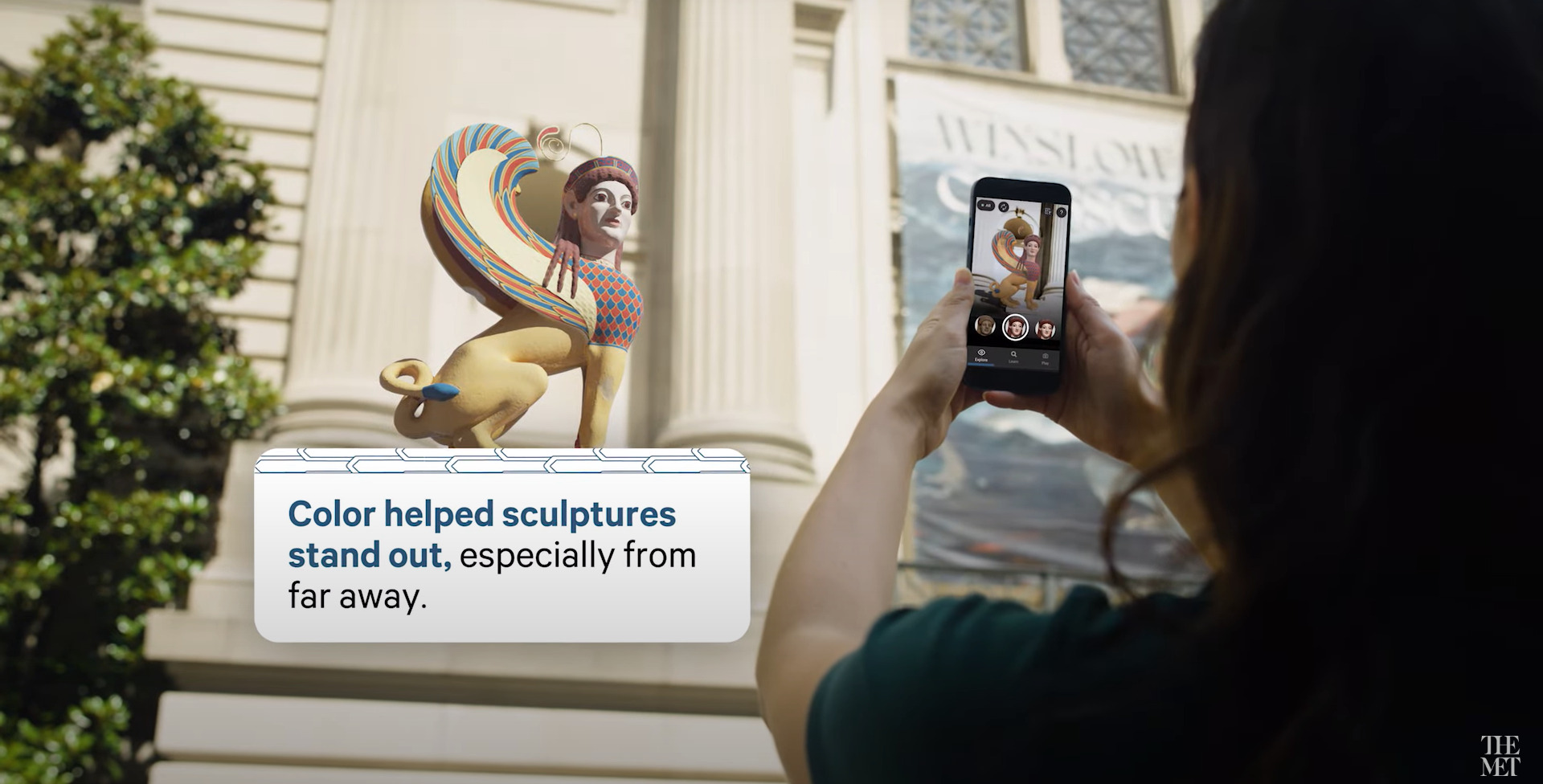

This mode uses 8th Wall’s World Tracking feature to place realistic 3D scanned models of sculptures in front of the viewer, who can then walk around the models and even get up close to examine tiny details. Multiple “filters” show different textures and/or models, including a towering stone slab called a “stele” on which the sphinx sculpture would originally have stood in antiquity.

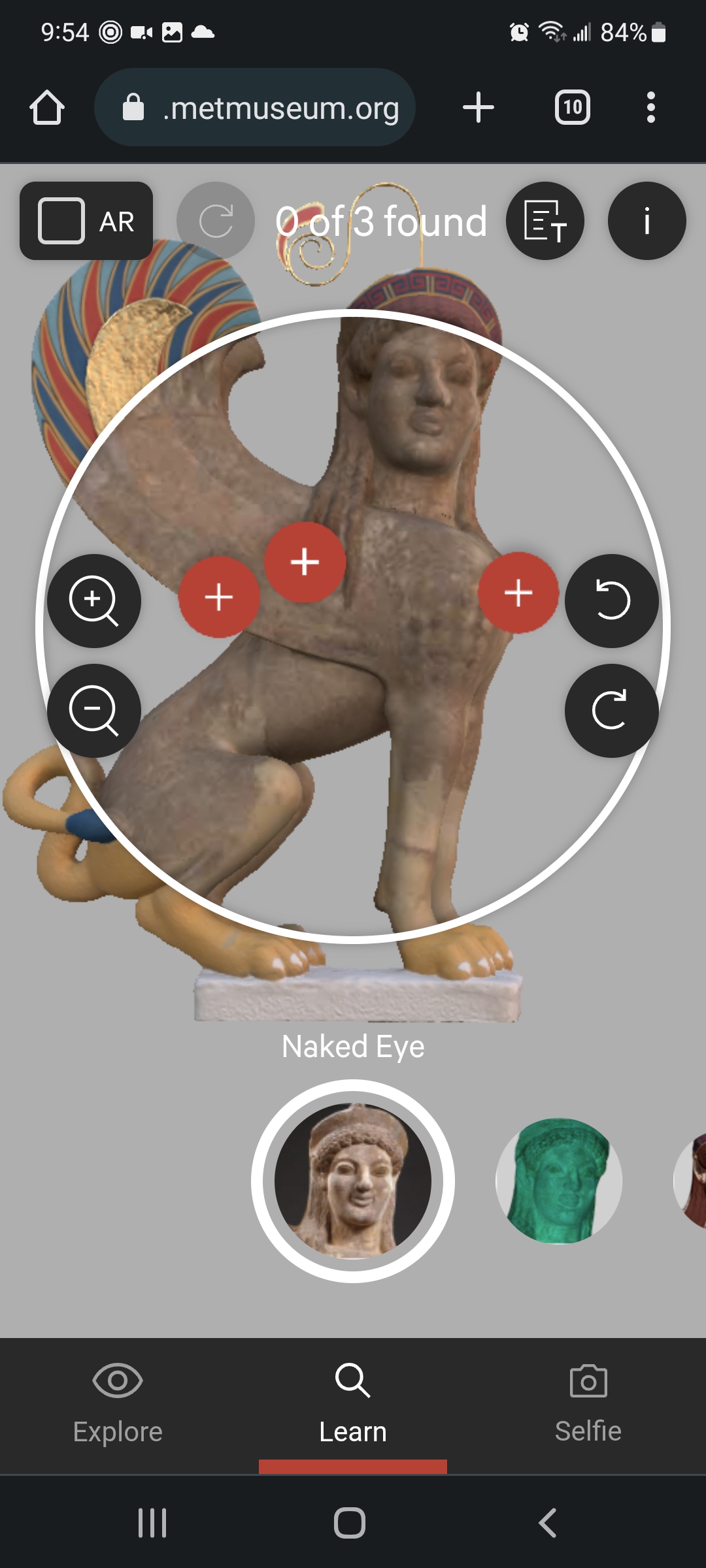

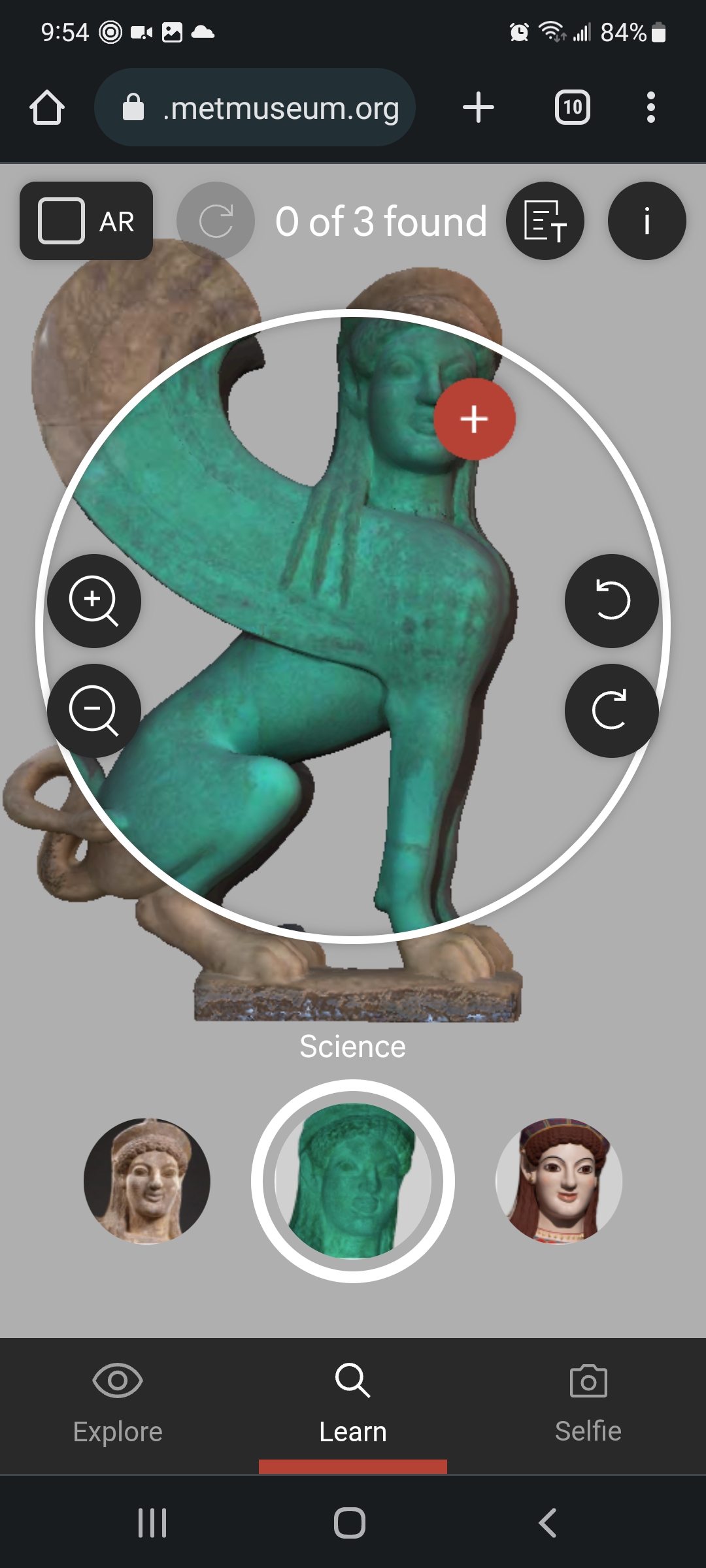

Learn mode

In this mode, a single 3D model is placed in front of the viewer along with a set of filters for them to use. Each filter applies different textures to the model’s surface to mimic the process of uncovering and recreating the sculpture’s original colors. Viewers can see the sculpture as it exists today, with multi-spectral scientific imagery, or with reconstructed paints.

Each filter has its own set of interactive hotspots attached at different places on the model, and tapping one opens a modal dialog with more information. Once the viewer has found every hotspot in every filter, they get a nice little reward screen.
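The completion rule above (every hotspot in every filter) can be sketched as a small tracker. This is a minimal sketch under assumptions: `HotspotTracker`, `markFound`, and the filter and hotspot IDs are hypothetical names chosen for illustration, not code from the app.

```javascript
// Hypothetical sketch: tracks which hotspots have been found in each filter
// and reports when every hotspot in every filter has been found.
// Filter names and hotspot IDs are illustrative, not the app's real data.
class HotspotTracker {
  constructor(hotspotsByFilter) {
    // filter name -> Set of all hotspot IDs in that filter
    this.all = new Map(
      Object.entries(hotspotsByFilter).map(([filter, ids]) => [filter, new Set(ids)])
    );
    // filter name -> Set of hotspot IDs found so far
    this.found = new Map([...this.all.keys()].map((filter) => [filter, new Set()]));
  }

  // Record a tap. Returns true only for the tap that completes the whole set,
  // so the reward screen can be shown exactly once.
  markFound(filter, hotspotId) {
    const ids = this.all.get(filter);
    if (!ids || !ids.has(hotspotId)) {
      throw new Error(`Unknown hotspot ${hotspotId} in filter ${filter}`);
    }
    const wasComplete = this.isComplete();
    this.found.get(filter).add(hotspotId);
    return !wasComplete && this.isComplete();
  }

  // True when every filter's found set is as large as its full set.
  isComplete() {
    for (const [filter, ids] of this.all) {
      if (this.found.get(filter).size < ids.size) return false;
    }
    return true;
  }
}
```

For example, a tracker built with `{ today: ["a", "b"], paint: ["c"] }` (hypothetical filter names) returns `false` from `markFound` until the last missing hotspot is tapped. Because only known IDs are ever added to the found sets, comparing set sizes is enough to detect completion, and repeated taps on an already-found hotspot are harmless.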

Play mode

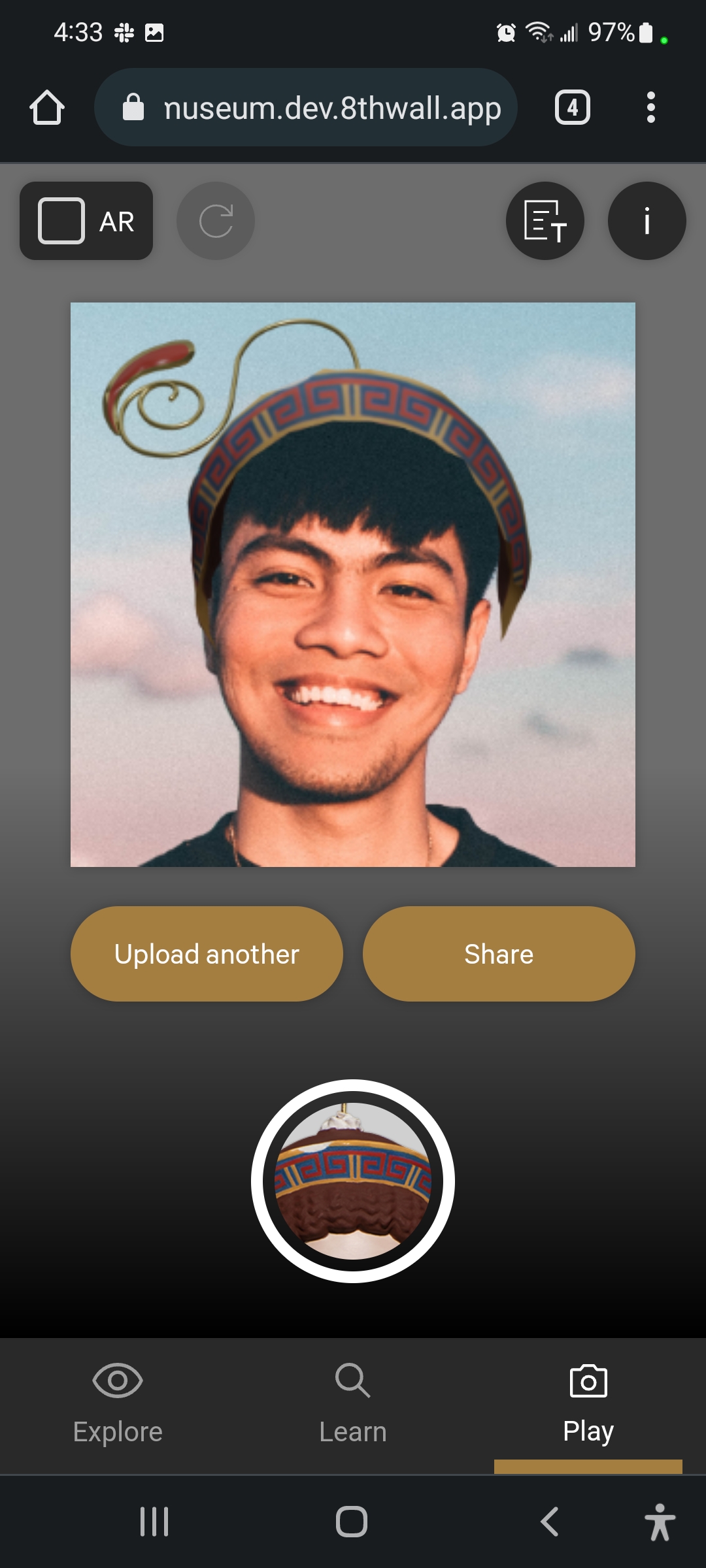

We couldn’t pass up the opportunity to add a classic AR feature – selfie filters! In this mode, the app switches to the front-facing camera and uses 8th Wall’s face tracking to attach 3D objects taken from the scanned sculptures to the user’s head.

“No camera” mode

8th Wall’s WebAR engine is very computationally expensive, so even the newest and fastest phones heat up and drain their batteries very quickly. Nobody wants to run out of battery in the middle of Manhattan (where The Met is located), so we felt it was important that users could turn AR off at any time and still have a great experience.

We also recognized that not all users would want to grant camera and sensor permissions for privacy reasons, and some users might not even have a functional camera. Other users may have perfectly functional devices, but have a disability or impairment that makes it uncomfortable or impossible to move or interact with their phone to get to the AR content.

That’s why we added an AR toggle button at the top of the app, allowing users to interact with all the same content (including the 3D models) as if it were just a normal 2D website.
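One way to structure such a toggle is a small controller that stops the camera-based engine when AR is switched off and falls back to the 2D viewer when the camera can’t be started. This is a sketch under assumptions: `createARToggle` and the callbacks `startAR`, `stopAR`, `show2DViewer`, and `hide2DViewer` are hypothetical placeholders supplied by the app, not real 8th Wall or Three.js APIs, and `startAR` is assumed to return a promise that rejects when camera permission is denied.

```javascript
// Hypothetical sketch of an AR on/off toggle with a 2D fallback.
// startAR, stopAR, show2DViewer and hide2DViewer are placeholder callbacks
// supplied by the app; none of them are real 8th Wall or Three.js APIs.
function createARToggle({ startAR, stopAR, show2DViewer, hide2DViewer }) {
  let arOn = false;
  let busy = false; // ignore extra taps while the camera is still starting

  async function setAR(enabled) {
    if (busy || enabled === arOn) return arOn;
    busy = true;
    try {
      if (enabled) {
        try {
          await startAR(); // assumed to reject if camera access is denied
          hide2DViewer();
          arOn = true;
        } catch (err) {
          // No permission or no working camera: stay in the 2D viewer
          show2DViewer();
          arOn = false;
        }
      } else {
        stopAR(); // release the camera and sensors to save battery
        show2DViewer();
        arOn = false;
      }
    } finally {
      busy = false;
    }
    return arOn;
  }

  return { setAR, toggle: () => setAR(!arOn), isOn: () => arOn };
}
```

Keeping the fallback inside the same controller means a denied permission prompt and a deliberate tap on the toggle both land the user in the same 2D experience.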

Explore and Learn modes

Both of these modes focus on allowing users to freely examine 3D models from the exhibition to discover interesting features and information. For these modes we implemented a classic model viewer UI with gestures and buttons for zooming and rotating the model.
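The gesture handling in a viewer like this mostly comes down to a little math: pinch distance drives zoom and drag distance drives rotation. The sketch below is illustrative only; the function names, the zoom limits, and the rotation speed are assumptions, not values from the app.

```javascript
// Hypothetical sketch of the gesture math behind a simple model viewer.
// Limits and sensitivity are illustrative assumptions.
const MIN_DISTANCE = 1.5;   // closest the camera may get to the model
const MAX_DISTANCE = 10;    // farthest the camera may get
const ROTATE_SPEED = 0.005; // radians of rotation per pixel dragged

function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Distance in pixels between two touch points (e.g. from a TouchEvent).
function touchDistance(a, b) {
  return Math.hypot(a.clientX - b.clientX, a.clientY - b.clientY);
}

// Pinch zoom. startDistance is the camera-to-model distance when the pinch
// began; spreading the fingers (currentSpan > startSpan) moves the camera closer.
function pinchZoom(startDistance, startSpan, currentSpan) {
  if (startSpan <= 0 || currentSpan <= 0) return startDistance;
  return clamp(startDistance * (startSpan / currentSpan), MIN_DISTANCE, MAX_DISTANCE);
}

// One-finger drag: horizontal movement spins the model around its vertical
// axis; vertical movement tilts it, limited to +/- 90 degrees so it never flips.
function dragRotate(rotation, dx, dy) {
  return {
    x: clamp(rotation.x + dy * ROTATE_SPEED, -Math.PI / 2, Math.PI / 2),
    y: rotation.y + dx * ROTATE_SPEED,
  };
}
```

In Three.js, the results could be applied to a model’s `rotation.x` and `rotation.y` and to the camera with `camera.position.setLength(distance)` when the camera looks at the origin. Three.js’s `OrbitControls` add-on offers similar behavior off the shelf.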

Play mode

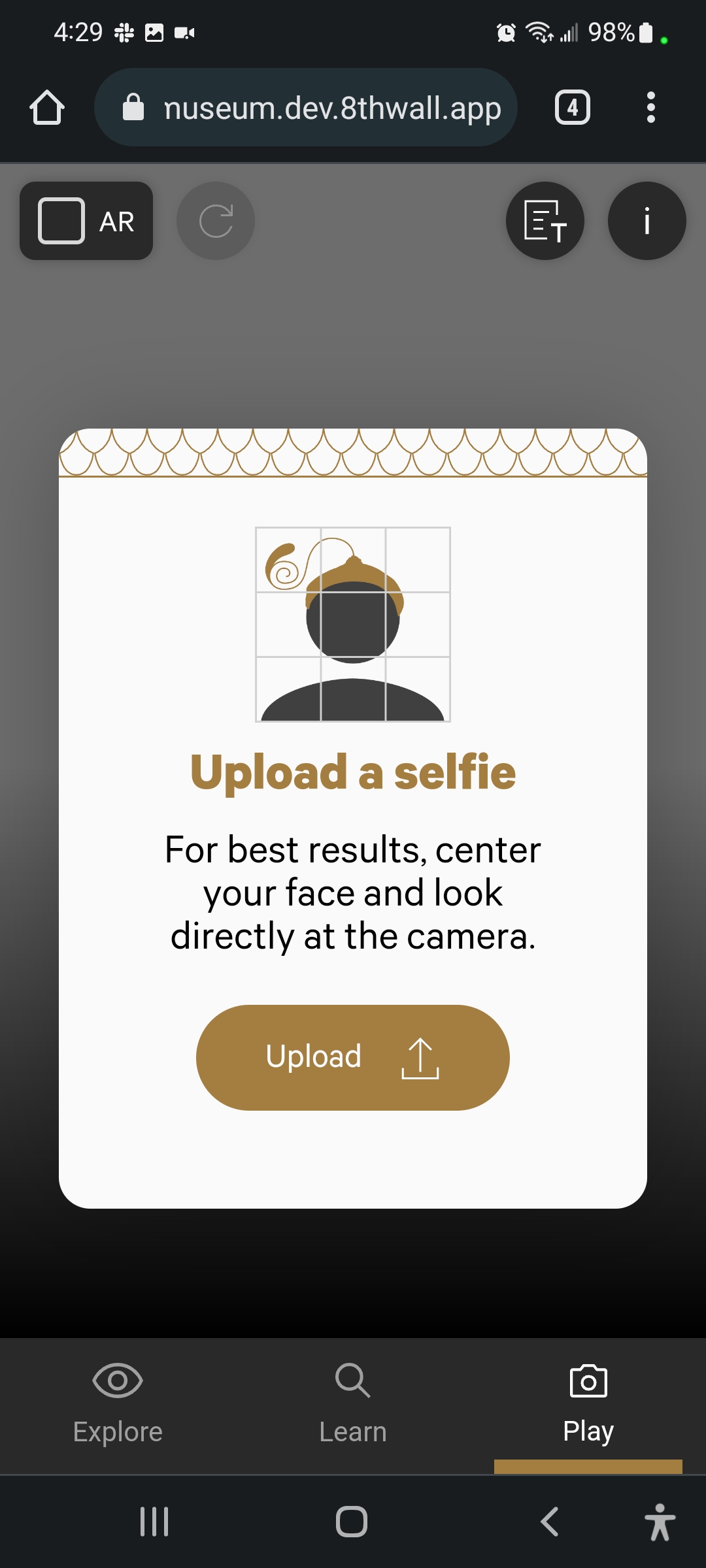

This mode was more about seeing a 3D object overlaid on your own face, so we felt a full 3D model viewer wasn’t necessary. Instead, users can upload a selfie they’ve already taken on their device and see the 3D objects overlaid on it automatically.

We decided not to use face detection here and instead always place the 3D model in exactly the same position on every image. We provided instructions to guide users in framing their face correctly, though in practice this may not have been as effective as we would have liked.
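Without face detection, placing the object “in exactly the same position” depends on normalizing every upload to one frame first. A minimal sketch, assuming the selfie is center-cropped to a fixed aspect ratio and the 3D object has already been rendered to a transparent image that is composited on top; `coverCrop`, `overlayPlacement`, and all the numeric constants are hypothetical.

```javascript
// Hypothetical sketch: place an overlay at the same fixed spot on every
// uploaded selfie, without face detection. The photo is center-cropped
// ("cover" fit) to a fixed aspect ratio, then the overlay is positioned using
// fractions of that frame. All constants are illustrative assumptions.
const FRAME_ASPECT = 3 / 4; // output frame width / height
const OVERLAY = { centerX: 0.5, top: 0.1, width: 0.6 }; // fractions of frame size

// Source rectangle to crop from the photo so it fills the frame exactly.
function coverCrop(imgWidth, imgHeight, aspect) {
  if (imgWidth / imgHeight > aspect) {
    // Photo is wider than the frame: trim the left and right edges
    const sw = imgHeight * aspect;
    return { sx: (imgWidth - sw) / 2, sy: 0, sw, sh: imgHeight };
  }
  // Photo is taller than the frame: trim the top and bottom edges
  const sh = imgWidth / aspect;
  return { sx: 0, sy: (imgHeight - sh) / 2, sw: imgWidth, sh };
}

// Top-left corner and width of the overlay inside a frame of the given width.
function overlayPlacement(frameWidth) {
  const frameHeight = frameWidth / FRAME_ASPECT;
  const width = frameWidth * OVERLAY.width;
  return {
    x: frameWidth * OVERLAY.centerX - width / 2,
    y: frameHeight * OVERLAY.top,
    width,
  };
}
```

On a 2D canvas, the crop maps directly onto the nine-argument form of `drawImage`, for example `ctx.drawImage(img, c.sx, c.sy, c.sw, c.sh, 0, 0, frameWidth, frameWidth / FRAME_ASPECT)`, after which the overlay image is drawn at the computed position. Because the crop always produces the same frame, the overlay lands in the same place regardless of the original photo’s size, which is also why the result depends on users framing their face the way the instructions describe.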